To begin, let's talk about Euler. Euler was an 18th-century mathematician who was famous, among many other things, for his work on the harmonic series. The harmonic series is defined as follows:

$$\frac11+\frac12+\frac13+\frac14+\cdots$$

This series blows up to infinity, and the proof is relatively simple. Mathematicians had known this result for hundreds of years before Euler. What Euler was concerned with was that same series, but with each denominator raised to an integer power $s$:

$$\frac{1}{1^s}+\frac{1}{2^s}+\frac{1}{3^s}+\frac{1}{4^s}+\cdots$$
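If you want to see the difference between the two series for yourself, here is a minimal Python sketch (the function name `partial_sum` is my own, purely for illustration). It adds up the first several terms for $s=1$, which is the harmonic series, and for $s=2$. The harmonic sums should keep creeping upward, roughly like the natural logarithm of the number of terms, while the $s=2$ sums should settle near $\pi^2/6$, the value Euler famously found.

```python
import math


def partial_sum(s, terms):
    """Add up 1/1^s + 1/2^s + ... + 1/terms^s (illustrative helper)."""
    return sum(1 / n**s for n in range(1, terms + 1))


for terms in (10, 1_000, 100_000):
    harmonic = partial_sum(1, terms)  # s = 1: never stops growing
    squares = partial_sum(2, terms)   # s = 2: levels off
    print(terms, harmonic, squares)

# For comparison with the s = 2 column.
print("pi^2 / 6 =", math.pi**2 / 6)
```

Of course, a finite sum can only hint at what happens; it is not a proof that one series diverges and the other converges.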

Euler went on to prove wonderful things about this function, which you can learn more about in my earlier blog post, but it was Riemann who really analyzed it and extended it to the complex plane. That is why it is now called the Riemann zeta function, written $\zeta(s)$.

So, what is the complex plane? Well, let's first talk about $i$. The number $i$ is defined as $\sqrt{-1}$. To most people, this seems like an absolute absurdity. How can you take the square root of a negative number? There are no real solutions to $x^2=-1$! But, to put this in perspective, take another equation: $x+2=0$. Well, obviously, $x$ is $-2$. But people in earlier centuries thought of negative numbers the way most people now think of imaginary numbers ($i$ and multiples of $i$). We are simply extending our number system in order to provide solutions to an equation.

The real fun comes when we have complex numbers. A complex number is of the form $x+yi$, where $x$ and $y$ are real numbers. Now, remember plotting on an x y graph from your school days. If we take an x y plane, can't we just treat its x and y as the $x$ and $y$ in our complex number? Here lies the complex plane:

The y axis holds the imaginary part, and the x axis holds the real part. So if x is 2 and y is -2, our complex number is $2-2i$.

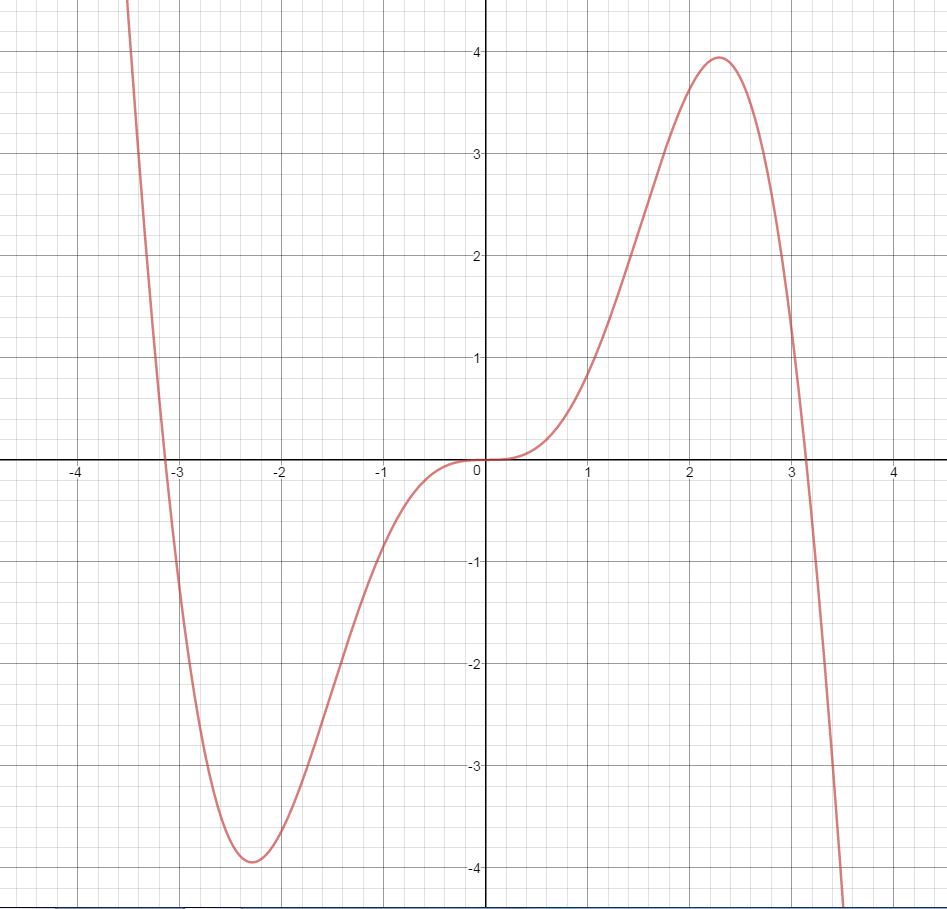
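Python happens to have complex numbers built in (it writes $i$ as `j`), so here is a small sketch of the example above. The variable names are just for illustration.

```python
# The point x = 2, y = -2 on the complex plane, i.e. the number 2 - 2i.
z = complex(2, -2)

print(z.real)  # the x coordinate (real part): 2.0
print(z.imag)  # the y coordinate (imaginary part): -2.0

# The defining property of i: squaring it gives -1.
i = 1j
print(i * i)   # (-1+0j)

# Distance from the origin to the point (2, -2), which is sqrt(8).
print(abs(z))
```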

Bringing it back to the Riemann zeta function, Riemann found a genius way to extend the function so that the denominators could be raised to complex powers. After doing this, he wondered where this function equals 0. He knew that if he plugged any negative even number (-2, -4, -6, etc.) into his function, it would be 0. These are called the trivial zeroes of the zeta function. But, looking at the graph of the other zeroes, you should see a pattern:

All of these zeroes that are not trivial (called, aptly, nontrivial zeroes) seem to lie on one line, specifically the vertical line x=1/2, known as the critical line. The Riemann Hypothesis is the statement that all the nontrivial zeroes lie on that line. We have searched extremely far, and so far nothing has told us otherwise. We also know that more than 40% of the nontrivial zeroes lie on the critical line, and computer checks have confirmed that at least the first ten trillion ($10^{13}$) of them do.
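You can poke at this yourself with a little Python. Riemann's actual extension is beyond the scope of this post, so the sketch below swaps in a different, well-known technique: an accelerated alternating series due to Peter Borwein, which approximates the zeta function whenever the real part of the input is greater than 0 (and the input is not 1). The function name `zeta`, the parameter `n`, and the list of heights (standard decimal approximations of the first three nontrivial zeroes) are assumptions of this example, not anything from Riemann's paper.

```python
from math import factorial, pi


def zeta(s, n=50):
    """Approximate zeta(s) for Re(s) > 0, s != 1 (Borwein's accelerated series).

    n is the number of terms used; 50 is plenty for the small inputs below.
    """
    # d[k] = n * sum_{i=0}^{k} (n+i-1)! * 4^i / ((n-i)! * (2i)!)
    d = []
    running = 0.0
    for i in range(n + 1):
        running += (n * factorial(n + i - 1) * 4**i
                    / (factorial(n - i) * factorial(2 * i)))
        d.append(running)

    # Weighted alternating sum 1/1^s - 1/2^s + 1/3^s - ...
    total = 0
    for k in range(n):
        total += (-1) ** k * (d[k] - d[n]) / (k + 1) ** s

    # Convert the alternating series back into zeta(s).
    return -total / (d[n] * (1 - 2 ** (1 - s)))


# Heights (imaginary parts) of the first three nontrivial zeroes, rounded.
first_zero_heights = [14.134725141734693, 21.022039638771555, 25.010857580145688]

for t in first_zero_heights:
    # On the critical line x = 1/2 the size of zeta should be essentially 0.
    print(t, abs(zeta(complex(0.5, t))))

# Same height as the first zero, but off the line at x = 3/4: clearly not 0.
print(abs(zeta(complex(0.75, first_zero_heights[0]))))

# Sanity check against Euler's value zeta(2) = pi^2 / 6.
print(zeta(2), pi**2 / 6)
```

Keep in mind that this only shows the function getting extremely small at a few points. Numerical evidence like this is exactly what we have lots of; it is not a proof.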

We are so sure of ourselves that many proofs nowadays start with "Assuming the Riemann hypothesis..." But we have yet to find a proof, or really even come close to one. That is the Riemann hypothesis, and (perhaps) in my next post I will talk about how it relates to the primes, and about some of the attempts made at cracking it. Thank you so much for reading, and I'll see you in the next post!